AI Solution

-

FLEX-BX210-Q470

Overview

» Supported CPUs:

Intel® Core™ i3-10320 3.8 GHz (up to 4.6 GHz, quad-core, 65W TDP)

Intel® Core™ i5-10500TE 2.3 GHz (up to 3.7 GHz, 6-core, 35W TDP)

Intel® Core™ i7-10700TE 2.0 GHz (up to 4.4 GHz, 8-core, 35W TDP)

» Four hot-swappable HDD/SSD bays with RAID 0/1/5/10 support

» Equipped with PCIe 3.0 x4 and PCIe 3.0 x8 slots

» M.2 2280 slot (PCIe Gen 3 x4) with NVMe support

» Supports IEI Mustang accelerator cards
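The four bays and RAID levels listed above trade raw capacity for redundancy. As a rough planning aid, here is a minimal Python sketch that computes usable capacity and guaranteed fault tolerance per level. It assumes identical drives and textbook layouts (RAID 1 as a mirror across all member drives, RAID 10 as striped mirror pairs); the `raid_usable` helper is hypothetical, not an IEI utility, and the system's RAID controller may impose different drive-count rules.

```python
def raid_usable(level: str, drives: int, size_tb: float) -> tuple[float, int]:
    """Return (usable capacity in TB, drive failures always survived)
    for `drives` identical drives of `size_tb` each.
    Hypothetical helper assuming textbook RAID layouts."""
    if level == "0":
        # Striping only: full raw capacity, no redundancy.
        if drives < 2:
            raise ValueError("RAID 0 needs at least 2 drives")
        return drives * size_tb, 0
    if level == "1":
        # Every member holds a full copy: capacity of one drive.
        if drives < 2:
            raise ValueError("RAID 1 needs at least 2 drives")
        return size_tb, drives - 1
    if level == "5":
        # One drive's worth of capacity goes to distributed parity.
        if drives < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        return (drives - 1) * size_tb, 1
    if level == "10":
        # Striped mirror pairs: half the raw capacity. Losing both drives
        # of one pair is fatal, so only one failure is guaranteed.
        if drives < 4 or drives % 2:
            raise ValueError("RAID 10 needs an even number of drives, at least 4")
        return drives // 2 * size_tb, 1
    raise ValueError(f"unsupported RAID level: {level}")


# Example: four 2 TB drives, one per hot-swappable bay.
for level in ("0", "1", "5", "10"):
    capacity, tolerance = raid_usable(level, 4, 2.0)
    print(f"RAID {level}: {capacity} TB usable, survives {tolerance} failure(s)")
```

With four 2 TB drives this reports 8.0, 2.0, 6.0 and 4.0 TB usable for RAID 0, 1, 5 and 10. RAID 10 survives a second failure only if it hits the other mirror pair, which is why the sketch counts one guaranteed failure.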

-

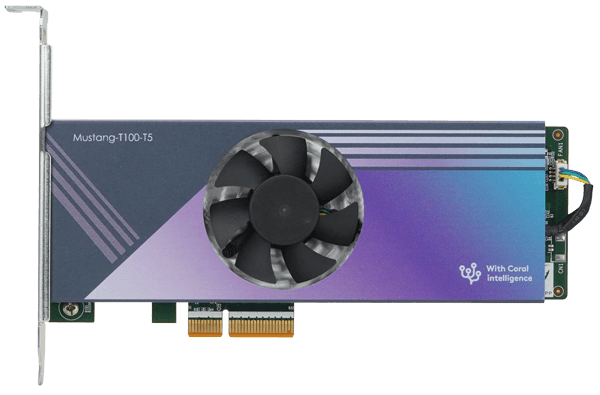

Mustang-T100-T5

Overview

» 5 x Google Edge TPU ML accelerators

» 20 TOPS peak performance (int8)

» Host interface: PCIe Gen2 x4

» Low-profile PCIe form factor

» Multi-card support

» Power consumption: approximately 15 W

» RoHS compliant
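The headline figures above can be broken down with simple arithmetic. This minimal Python sketch derives per-TPU throughput and a peak TOPS/W ratio from the listed values (treating the approximate 15 W as exact), and estimates host-link bandwidth from the standard PCIe line rates: 5 GT/s with 8b/10b encoding for Gen2, 8 GT/s with 128b/130b encoding for Gen3. The helper name `pcie_bandwidth_gb_s` is illustrative, and real transfer rates are lower once packet and protocol overhead are counted.

```python
# Per-lane raw rate (GT/s) and line-encoding efficiency per PCIe generation.
PCIE_GEN = {
    2: (5.0, 8 / 10),     # Gen2: 8b/10b encoding
    3: (8.0, 128 / 130),  # Gen3: 128b/130b encoding
}


def pcie_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Approximate one-direction link bandwidth in GB/s,
    ignoring packet and protocol overhead."""
    rate_gt_s, efficiency = PCIE_GEN[gen]
    return rate_gt_s * efficiency * lanes / 8  # bits -> bytes


# Values quoted for the Mustang-T100-T5 above.
TPUS = 5
PEAK_TOPS = 20.0  # int8 peak, whole card
POWER_W = 15.0    # listed as approximate

print(f"per Edge TPU: {PEAK_TOPS / TPUS:.1f} TOPS")
print(f"efficiency: {PEAK_TOPS / POWER_W:.2f} TOPS/W (peak, approximate)")
print(f"host link Gen2 x4: {pcie_bandwidth_gb_s(2, 4):.2f} GB/s")
print(f"Gen3 x8 slot: {pcie_bandwidth_gb_s(3, 8):.2f} GB/s")
```

This works out to 4.0 TOPS per Edge TPU and about 1.33 TOPS/W. The Gen2 x4 host interface gives 2.00 GB/s per direction, against roughly 7.88 GB/s for a PCIe 3.0 x8 slot such as those on the FLEX-BX210-Q470.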

-

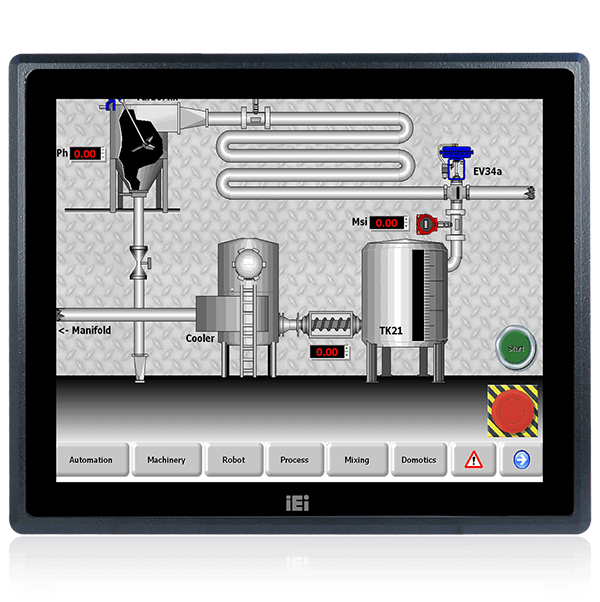

PPC-F17B-BT [ KC certified ]

Overview

» Supports the IEI iRIS-2400 remote intelligent solution

» Intel® Celeron® J1900 quad-core SoC

» Robust IP65 aluminum front bezel

» Aesthetic ultra-thin bezel for seamless panel-mount installation

» Projected capacitive multi-touch and resistive single-touch options

» Dual full-size PCIe Mini card expansion

» 9 V~36 V wide-range DC input

-

PPC-F10B-BT [ KC certified ]

Overview

» 5.7”, 8” and 10.4” fanless industrial panel PC

» 2.0 GHz Intel® Celeron® J1900 quad-core processor or 1.58 GHz Intel® Celeron® N2807 dual-core processor

» Low-power DDR3L memory supported

» IP65-compliant front panel

» 9 V~30 V wide-range DC input

» mSATA SSD supported

» Dual GbE for backup

» -10°C~50°C extended operating temperature

-

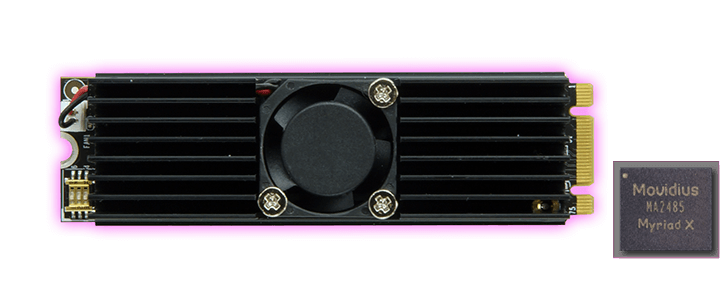

Mustang-M2BM-MX2 [ SAMPLE ]

Overview

•Operating Systems

Ubuntu 16.04.3 LTS 64bit, CentOS 7.4 64bit, Windows® 10 64bit

•OpenVINO™ toolkit

◦Intel® Deep Learning Deployment Toolkit

- Model Optimizer

- Inference Engine

◦Optimized computer vision libraries

◦Intel® Media SDK

•Current Supported Topologies: AlexNet, GoogLeNet V1/V2, MobileNet SSD, MobileNet V1/V2, MTCNN, SqueezeNet 1.0/1.1, Tiny YOLO V1 & V2, YOLO V2, ResNet-18/50/101

* For information on additional supported topologies, refer to the official Intel® OpenVINO™ toolkit website.

•High flexibility: Mustang-M2BM-MX2 is built on the OpenVINO™ toolkit structure, which allows trained models from frameworks and formats such as Caffe, TensorFlow, MXNet, and ONNX to run on it after conversion to an optimized IR (Intermediate Representation).
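The conversion to IR described above is done with the Model Optimizer from the Deep Learning Deployment Toolkit. Below is a minimal Python sketch that builds a Model Optimizer command line for a trained model. It assumes a 2019 to 2022 OpenVINO™ release, where `--input_model`, `--framework`, `--data_type` and `--output_dir` are accepted, with a `mo` entry point on the PATH (older releases run `python3 mo.py` from the toolkit's `model_optimizer` directory instead). It also assumes the card's VPUs are Myriad™ X, which run FP16 inference. The helper `mo_command` and the file name `mobilenet_ssd.caffemodel` are hypothetical.

```python
from pathlib import Path

# Model Optimizer --framework values, keyed by the trained model's file extension.
FRAMEWORK_BY_SUFFIX = {
    ".caffemodel": "caffe",  # MO looks for a same-named .prototxt unless --input_proto is given
    ".pb": "tf",             # frozen TensorFlow graph
    ".params": "mxnet",
    ".onnx": "onnx",
}


def mo_command(model_file: str, output_dir: str = "ir") -> list[str]:
    """Hypothetical helper: build a Model Optimizer command that converts a
    trained model into an FP16 IR (.xml topology + .bin weights)."""
    suffix = Path(model_file).suffix
    if suffix not in FRAMEWORK_BY_SUFFIX:
        raise ValueError(f"no framework mapping for {suffix!r}")
    return [
        "mo",  # assumption: 'mo' entry point; older releases ship mo.py instead
        "--input_model", model_file,
        "--framework", FRAMEWORK_BY_SUFFIX[suffix],
        "--data_type", "FP16",  # assumption: Myriad X VPUs execute FP16 inference
        "--output_dir", output_dir,
    ]


print(" ".join(mo_command("mobilenet_ssd.caffemodel")))
```

This prints `mo --input_model mobilenet_ssd.caffemodel --framework caffe --data_type FP16 --output_dir ir`. On a machine with the toolkit installed, the list can be passed to `subprocess.run(cmd, check=True)`; the resulting `.xml`/`.bin` pair is what the Inference Engine loads for execution on the card.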

-

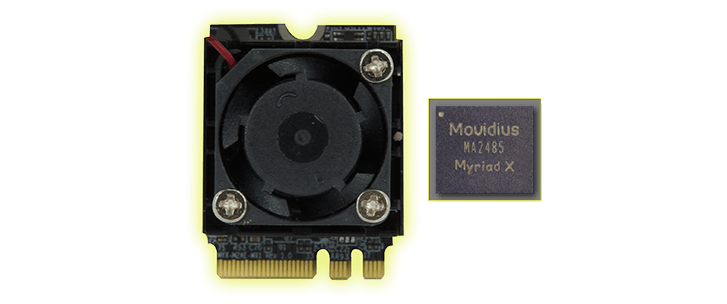

Mustang-M2AE-MX1 [ SAMPLE ]

Overview

•Operating Systems

Ubuntu 16.04.3 LTS 64bit, CentOS 7.4 64bit, Windows® 10 64bit

•OpenVINO™ toolkit

◦Intel® Deep Learning Deployment Toolkit

- Model Optimizer

- Inference Engine

◦Optimized computer vision libraries

◦Intel® Media SDK

•Current Supported Topologies: AlexNet, GoogLeNet V1/V2, MobileNet SSD, MobileNet V1/V2, MTCNN, SqueezeNet 1.0/1.1, Tiny YOLO V1 & V2, YOLO V2, ResNet-18/50/101

* For information on additional supported topologies, refer to the official Intel® OpenVINO™ toolkit website.

•High flexibility: Mustang-M2AE-MX1 is built on the OpenVINO™ toolkit structure, which allows trained models from frameworks and formats such as Caffe, TensorFlow, MXNet, and ONNX to run on it after conversion to an optimized IR (Intermediate Representation).

-

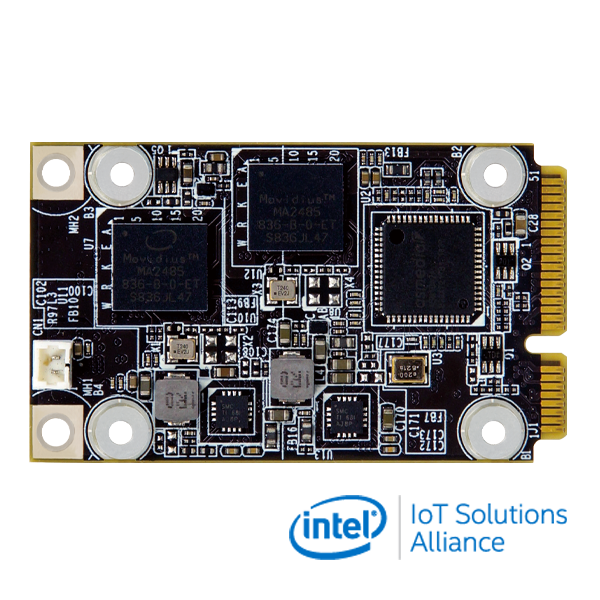

Mustang-MPCIE-MX2 [ KC certified ]

Overview

● miniPCIe form factor (30 x 50 mm)

● 2 x Intel® Movidius™ Myriad™ X VPU MA2485

● Power efficient: approximately 7.5 W

● Operating temperature: -20°C~60°C

● Powered by Intel’s OpenVINO™ toolkit

-

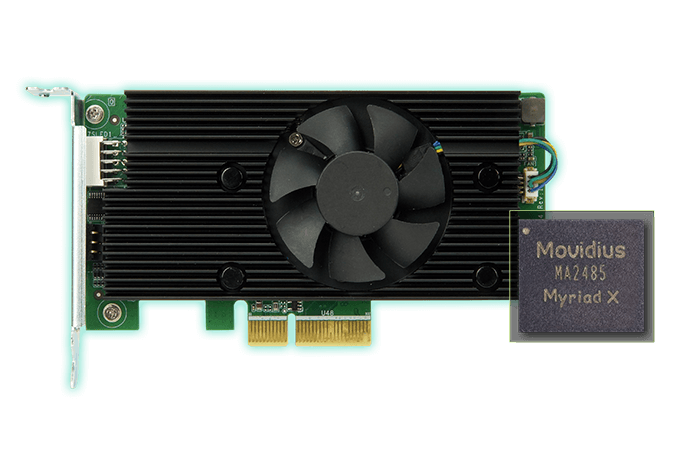

Mustang-V100-MX4 [ KC certified ]

Overview

•Operating Systems

Ubuntu 16.04.3 LTS 64bit, CentOS 7.4 64bit, Windows® 10 64bit

•OpenVINO™ toolkit

◦Intel® Deep Learning Deployment Toolkit

- Model Optimizer

- Inference Engine

◦Optimized computer vision libraries

◦Intel® Media SDK

◦Current Supported Topologies: AlexNet, GoogLeNet V1/V2, MobileNet SSD, MobileNet V1/V2, MTCNN, SqueezeNet 1.0/1.1, Tiny YOLO V1 & V2, YOLO V2, ResNet-18/50/101

* For information on additional supported topologies, refer to the official Intel® OpenVINO™ toolkit website.

◦High flexibility: Mustang-V100-MX4 is built on the OpenVINO™ toolkit structure, which allows trained models from frameworks and formats such as Caffe, TensorFlow, MXNet, and ONNX to run on it after conversion to an optimized IR (Intermediate Representation).

-

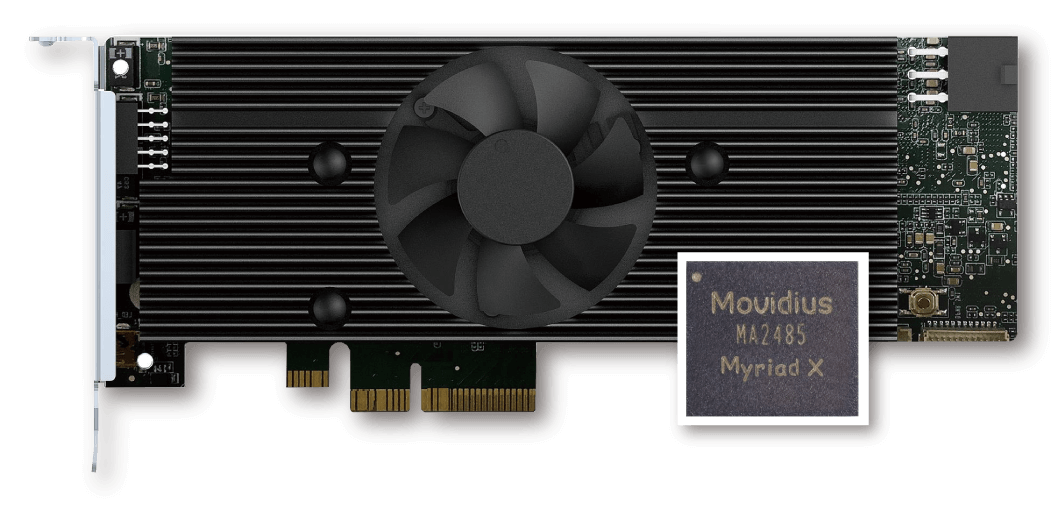

Mustang-V100-MX8 [ KC certified ] [ Discontinued ]

Overview

•Operating Systems

Ubuntu 16.04.3 LTS 64bit, CentOS 7.4 64bit, Windows® 10 64bit

•OpenVINO™ Toolkit

◦Intel® Deep Learning Deployment Toolkit

- Model Optimizer

- Inference Engine

◦Optimized computer vision libraries

◦Intel® Media SDK

◦*OpenCL™ graphics drivers and runtimes.

◦Current Supported Topologies: AlexNet, GoogLeNet V1/V2, MobileNet SSD, MobileNet V1/V2, MTCNN, SqueezeNet 1.0/1.1, Tiny YOLO V1 & V2, YOLO V2, ResNet-18/50/101

* For information on additional supported topologies, refer to the official Intel® OpenVINO™ toolkit website.

•High flexibility: Mustang-V100-MX8 is built on the OpenVINO™ toolkit structure, which allows trained models from frameworks such as Caffe, TensorFlow, and MXNet to run on it after conversion to an optimized IR (Intermediate Representation).

*OpenCL™ is a trademark of Apple Inc. used by permission by Khronos.

-

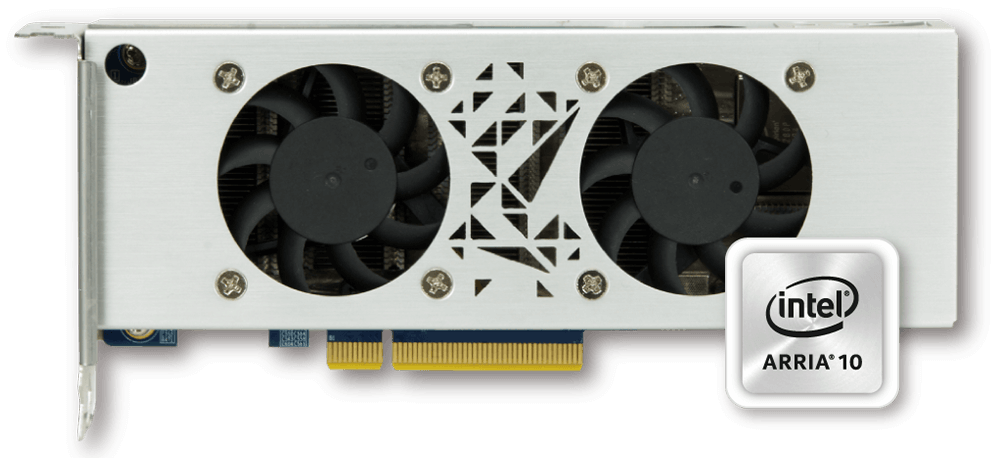

Mustang-F100-A10 [ SAMPLE ]

Overview

•Operating Systems

Ubuntu 16.04.3 LTS 64bit, CentOS 7.4 64bit (Windows 10 support planned for the end of 2018; more operating systems coming soon)

•OpenVINO™ toolkit

◦Intel® Deep Learning Deployment Toolkit

- Model Optimizer

- Inference Engine

◦Optimized computer vision libraries

◦Intel® Media SDK

◦*OpenCL™ graphics drivers and runtimes.

◦Current Supported Topologies: AlexNet, GoogLeNet, Tiny YOLO, LeNet, SqueezeNet, VGG16, ResNet (more variants coming soon)

◦Intel® FPGA Deep Learning Acceleration Suite

•High flexibility: Mustang-F100-A10 is built on the OpenVINO™ toolkit structure, which allows trained models from frameworks such as Caffe, TensorFlow, and MXNet to run on it after conversion to an optimized IR (Intermediate Representation).

*OpenCL™ is a trademark of Apple Inc. used by permission by Khronos.